Let's say you're writing an API service. You need this API to be highly available, distributed, fast... the requirements are several pages long. The problem with API calls is that one call might not use the same cache objects as another, or might use different data sources because of partitioning or other technical reasons.

A typical PHP programmer might just create an instance of every cache class, database class and anything else they might need. As a result, typical PHP applications end up creating objects that are never used during the course of execution. You can make some assumptions that optimize a few of these cases away, but you usually still have overhead.

Your API service needs to be fast. I'm trying to keep everything below 10ms, with access to data from SQL databases and cache servers. Connecting to them takes time. I usually don't hit the 10ms goal without bypassing PHP altogether. My (old but current) API layer currently pushes everything out at 22ms at the 50th percentile and 30ms at the 95th percentile.

You live, you learn. I was instantiating database objects, cache objects and other things I didn't need, which took valuable time away from creating and using only what I needed. So:

- I needed a way to create and connect only to those services I actually need to run. If I only need to connect to Redis, I don’t need to connect to MySQL as well.

- I wanted to leverage PHP language constructs to this end. Injecting dependencies should be language-driven, not programmer-driven.

I want you to think about this. Language-driven vs. programmer-driven: this is the main point. With programmer-driven dependency injection, your programmers will spend their time writing methods that get, set and report missing dependencies. It is a flawed concept from the beginning, as it introduces human error into the equation.

The programmer can and will forget to pass a dependency into an object, causing a fatal error that doesn't trigger an exception that could be caught. The problems mount: the approach demands attention to detail and discipline from the programmer, who spends time writing boilerplate code instead of developing new features.

You can reduce this risk by implementing your dependencies as language-driven constructs. PHP 5.4 introduced traits, which can be leveraged to infer which dependencies should be injected into objects after they are instantiated. A typical execution flow would then be:
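One way such a flow could look is sketched below. This is a minimal illustration under stated assumptions, not the implementation described in this post: the `Injector` class, the `NeedsCache` and `NeedsDatabase` traits, and the stand-in `RedisCache` and `MysqlDatabase` classes are all hypothetical names. The only language features it relies on are traits and the built-in `class_uses()` function, which lists the traits a class uses directly.

```php
<?php
// Hypothetical stand-ins for real services. The counters only exist so we
// can see which services were actually created.
class RedisCache
{
    public static $instances = 0;
    public function __construct() { self::$instances++; }
}

class MysqlDatabase
{
    public static $instances = 0;
    public function __construct() { self::$instances++; }
}

// Each trait declares one dependency as a property on the class using it.
trait NeedsCache
{
    public $cache;
}

trait NeedsDatabase
{
    public $database;
}

// Hypothetical injector: maps a trait name to a property and a factory.
class Injector
{
    private $factories = array();
    private $shared = array();

    public function register($trait, $property, $factory)
    {
        $this->factories[$trait] = array($property, $factory);
    }

    public function create($class)
    {
        $object = new $class();
        // class_uses() reports only traits used directly by this class,
        // not those of parent classes or of other traits.
        foreach (class_uses($object) as $trait) {
            if (!isset($this->factories[$trait])) {
                continue;
            }
            list($property, $factory) = $this->factories[$trait];
            // Create each service once, and only when something needs it.
            if (!isset($this->shared[$trait])) {
                $this->shared[$trait] = call_user_func($factory);
            }
            $object->$property = $this->shared[$trait];
        }
        return $object;
    }
}

// This endpoint declares that it needs a cache and nothing else.
class ProfileEndpoint
{
    use NeedsCache;
}

$injector = new Injector();
$injector->register('NeedsCache', 'cache', function () {
    return new RedisCache();
});
$injector->register('NeedsDatabase', 'database', function () {
    return new MysqlDatabase();
});

$endpoint = $injector->create('ProfileEndpoint');
```

In this sketch `ProfileEndpoint` only uses `NeedsCache`, so the MySQL factory is never called and no database object is created. The class states its dependencies with a `use` line, and nobody has to remember to pass them in by hand.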

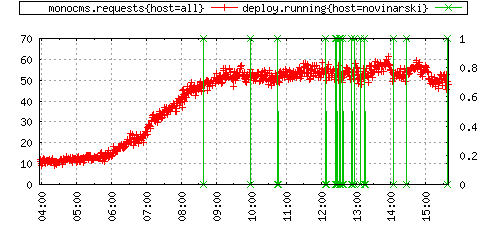

Green lines are code deploys and red ones are requests going to a part of the cluster. I had a big deploy today, and I'm glad to come out of it relatively unscathed. If I could give advice to any software developer it would be this:

Plan your software wisely. If the client needs new features, think about them. Don't just think about whether they scale; think about where the project is going. Think about the work you'll do now, and the work you or someone else might have to do down the line because of your decisions. Having a procedure for scaling the work that the client sees is better than having to scale the part of your software which the client never gets to see. Enable your client, don't let your client disable you. Sometimes rebuilding is the only option - and if it comes to that, you most certainly didn't follow my advice up to this point.

Learn from your mistakes and rebuild your ruins well.

- Tit Petric

It's days like this when I love my job. I'm implementing back pressure for API calls that communicate with an external service. Depending on how overloaded the external service is, my API interface adapts to use caching more extensively, or to use a service-friendly request retry strategy, minimizing impact on infrastructure and possibly even resolving problems when they occur. This is done by keeping track of a lot of data: timeouts, error responses, request duration, ratios between failed and successful requests, and so on.
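To make the idea concrete, here is a rough sketch of how such an adaptive choice could be made. It is an assumption-laden illustration, not the code behind this post: the `BackPressure` class, the strategy names and the 0.2 and 0.5 thresholds are all made up, and it tracks only the ratio of failed to successful requests rather than timeouts or request duration.

```php
<?php
// Hypothetical tracker: keeps a sliding window of recent call outcomes
// and picks a strategy from the share of failed calls.
class BackPressure
{
    private $window;
    private $outcomes = array();

    public function __construct($window = 20)
    {
        $this->window = $window;
    }

    // Record the outcome of one call to the external service.
    public function record($success)
    {
        $this->outcomes[] = (bool) $success;
        if (count($this->outcomes) > $this->window) {
            array_shift($this->outcomes); // drop the oldest outcome
        }
    }

    // Share of failed calls in the current window (0.0 when empty).
    public function failureRatio()
    {
        $total = count($this->outcomes);
        if ($total === 0) {
            return 0.0;
        }
        $failed = count(array_keys($this->outcomes, false, true));
        return $failed / $total;
    }

    // Thresholds are illustrative assumptions, not measured values.
    public function strategy()
    {
        $ratio = $this->failureRatio();
        if ($ratio >= 0.5) {
            return 'cache-only';
        }
        if ($ratio >= 0.2) {
            return 'retry-with-backoff';
        }
        return 'direct';
    }
}

$pressure = new BackPressure(10);

// Eight successful calls: the service looks healthy.
for ($i = 0; $i < 8; $i++) {
    $pressure->record(true);
}
$healthy = $pressure->strategy();

// Two failures bring the window to 2 failed out of 10.
$pressure->record(false);
$pressure->record(false);
$degraded = $pressure->strategy();

// Three more failures push out old successes: 5 failed out of 10.
for ($i = 0; $i < 3; $i++) {
    $pressure->record(false);
}
$overloaded = $pressure->strategy();
```

The sliding window means the strategy relaxes again on its own once successful calls start replacing the failures, which is the "adapts" part of the idea.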

It's a nice day when I have problems like this.

- Tit Petric
